Custom LLMs Cut Financial Document Review Time by 70%: Here's How

Financial institutions process millions of documents daily, including loan applications, compliance forms, contracts, and disclosures. Yet, despite decades of automation efforts, most of this work still relies on manual reviews or brittle rule-based systems. Why? Financial documents are notoriously complex, highly variable, and deeply contextual. Traditional tools like OCR and RPA can’t handle the nuance. Even generic AI models often stumble.

The need for smarter document intelligence is growing urgent. Use cases like loan origination, KYC/AML checks, and regulatory filings generate serious operational drag when delays and errors creep in. Backlogs grow. Compliance risks multiply.

This is where custom large language models (LLMs) offer a fresh path forward. But not as magic replacements for humans or plug-and-play tools. They work when designed for specific data, workflows, and compliance needs. This post walks through why financial document processing needs a rethink, what custom LLMs actually involve, and how to implement them with eyes wide open.

Let’s break it down.

What Are Custom LLMs?

A custom LLM is a large language model trained or adapted to perform tasks in a particular domain, like finance, using data, language, and formats unique to that context. Unlike generic models trained on internet-scale content, these models "speak the language" of SEC filings, bank forms, or underwriting policies.

Custom vs. Open-Source vs. Proprietary Models

“Open-source” and “proprietary” describe licensing and hosting options, not capability by default. You can build custom systems on either. What matters is control over data, auditability, and how reliably the system follows your required outputs.

Fine-Tuning vs. Prompt Engineering vs. RAG

- Fine-tuning: Updating model weights with labeled examples.

- Prompt engineering: Designing input templates to guide output.

- Retrieval-augmented generation (RAG): Supplementing prompts with dynamic documents at inference time.

The right combination depends on the task and compliance needs. Financial data is not just technical it’s legal, regulated, and sensitive. That’s why off-the-shelf models often fail to meet the bar.

Don’t assume all LLMs are equal. One tuned for social media sentiment won’t help with forensic accounting.

Document Types LLMs Are Transforming

Not all documents create the same workload. LLM value rises when documents carry business-critical meaning that traditional extraction cannot reliably capture.

Structured vs. semi-structured vs. unstructured

- Structured: Fixed fields and layouts (some digital forms). Traditional extraction often works here.

- Semi-structured: Repeating patterns with variation (loan applications, KYC forms, statements). This is where rules start breaking.

- Unstructured: Free text with legal and narrative complexity (contracts, policies, filings, emails, claim descriptions).

High-Impact Document Types

LLMs increasingly support:

- Forms: KYC onboarding packets, loan apps, change-of-address, beneficiary updates.

- Contracts and agreements: ISDA schedules, credit agreements, underwriting terms, vendor contracts.

- Reports and filings: 10-K/10-Q, risk disclosures, offering memoranda, rating agency reports.

- Claims and case files: Insurance claims, supporting evidence, adjuster notes, medical summaries.

- Operational documents: SOPs, policy updates, audit evidence packs.

Why Processing Needs Differ

Some tasks require classification and routing (send to the right queue). Others demand extraction into a strict schema (names, amounts, dates). Others need summarization with citations for analysts. A mortgage file, for example, combines scanned paystubs, bank statements, appraisal reports, and disclosures, each with a different structure, quality, and exception patterns.

The practical takeaway:

Document automation succeeds when you align model tactics to document variability, not when you pick a single “smart” tool and hope it generalizes.

Key Use Cases in Financial Services

Custom LLMs don’t just summarize text, they perform targeted tasks that drive operational efficiency and risk reduction.

1. Document Classification and Routing

LLMs can read incoming documents (email attachments, portal uploads) and classify them by type or urgency. This enables automated routing to the correct queue or analyst.

2. Intelligent Data Extraction

Extracting structured data from messy PDFs or scanned documents is a core strength, especially when models are trained on domain-specific formats.

3. Compliance and Audit Support

LLMs assist by flagging missing disclosures, reconciling figures, or checking against regulatory frameworks. They become intelligent companions for auditors and legal teams.

4. KYC/AML Enhancements

Automate adverse media checks, extract watchlist data, or summarize identity documents to streamline customer verification.

5. Analyst-Facing Summarization

Summarize long earnings reports or ESG filings into digestible overviews that save hours of analyst time.

⮕ Real-world example: Using an LLM to extract ESG commitments from 10-Ks across a portfolio saves compliance teams weeks of manual review.

It’s a mistake to pigeonhole LLMs as “just summarizers.” Their real power lies in layered workflows.

Comparing General vs. Custom LLMs for Finance

Not all models are fit for regulated environments. Here’s how general-purpose models fall short and why custom versions outperform.

Limitations of Generic LLMs

Generic models often struggle with:

- Schema discipline: returning the exact fields, types, and formats your systems require

- Firm-specific language: internal product names, control IDs, and exception categories

- Document artifacts: tables, footnotes, multi-column layouts, scanned images, and addenda

- Edge cases: amended filings, unusual covenants, non-standard disclosures

- Repeatability: slight prompt changes leading to different outputs

They also raise governance challenges. If a model cannot reliably cite sources or explain its reasoning path, audit teams will not trust it.

Advantages of Domain-Specific Fine-Tuning

Customization improves:

- Consistency: fewer formatting errors and fewer “almost right” extractions

- Coverage: better handling of domain tokens (tickers, SEC forms, legal clauses, policy terms)

- Grounding: stronger linkage to approved documents via RAG

- Control: clearer versioning, testing, and change management

Example:

A tuned workflow can reliably recognize SEC form sections and extract ESG metrics from filings while separating reported values from forward-looking statements.

Pitfall to avoid: assuming a base model “fits” regulated workflows out of the box. Regulated work needs determinism where possible, and controlled uncertainty where not.

Architecture of a Custom LLM Document Workflow

Deploying LLMs isn’t just “plug it in.” A robust architecture ensures quality, speed, and reliability.

Input Preprocessing

- OCR for scanned documents

- Language detection

- Document type classification

Model Layers

Most systems use layered behavior:

- Prompt templates for each document type and task, with strict output schemas

- RAG to pull approved definitions, policy language, product rules, and prior decisions

- Fallback strategies when confidence drops (alternate prompts, smaller models, or rule-based checks)

Output Validation

- Confidence scoring to flag uncertain outputs

- Human-in-the-loop (HITL) reviews for critical tasks

- Logging and versioning for compliance review

Integration with Internal Systems

- API endpoints to push/pull data

- Hooks into CRMs, risk engines, or reporting tools

- Role-based access controls

A full pipeline connects raw documents to decision-making securely and scalably. Python developers typically handle model orchestration, data pipelines, and evaluation logic. DevOps and infrastructure engineers often use tools like Ansible and Terraform to manage deployment, scaling, and environment consistency.

Implementation Guide: From POC to Production

Many LLM projects stall after the demo phase. Here’s how to move from proof of concept to enterprise-grade deployment.

Data Requirements

- Labeled examples for fine-tuning or eval

- Redaction of personally identifiable information (PII)

- Annotation tools for efficient labeling

Evaluation Benchmarks

- Accuracy of extractions (F1, precision/recall)

- Hallucination rate on known queries

- Latency and throughput under load

Choosing a Model

Model choice depends on constraints:

- Commercial APIs: fast to start, strong baseline quality, vendor governance needs

- Open-source models: higher control, heavier engineering and security work

- Hybrid: keep sensitive steps internal while using external models for lower-risk tasks

Deployment Options

- SaaS platforms (quick start, less flexibility)

- API integration (middleware with business logic)

- On-prem or private cloud (max data control)

Legal teams often underestimate how much review is needed before launch. Get them involved early. Also, DevOps developers use automation tools such as Ansible to enforce security, configuration, and release consistency.

Risks, Limitations & Governance Considerations

A document model can create risk even when it “works,” because errors propagate into downstream decisions.

Regulatory exposure and auditability

Regulators and internal audit functions will ask:

- What data trained the system?

- What sources did it use for each output?

- Can you reproduce results for a past decision?

- How do you prevent unauthorized data retention?

If you cannot answer those questions cleanly, you create governance debt.

Hallucinations and False Positives

An LLM might “confidently” extract a risk indicator that isn’t there. Guardrails like confidence thresholds and fallback logic are essential.

Model Drift and Version Control

As regulations and data shift, model performance can degrade. Regular retraining and A/B testing help maintain accuracy.

Data Privacy and Retention

Ensure adherence to data retention policies and region-specific privacy laws (e.g., GDPR, GLBA).

⮕ Consider the risk of a hallucinated extraction falsely flagging a customer for fraud—an issue with downstream legal implications.

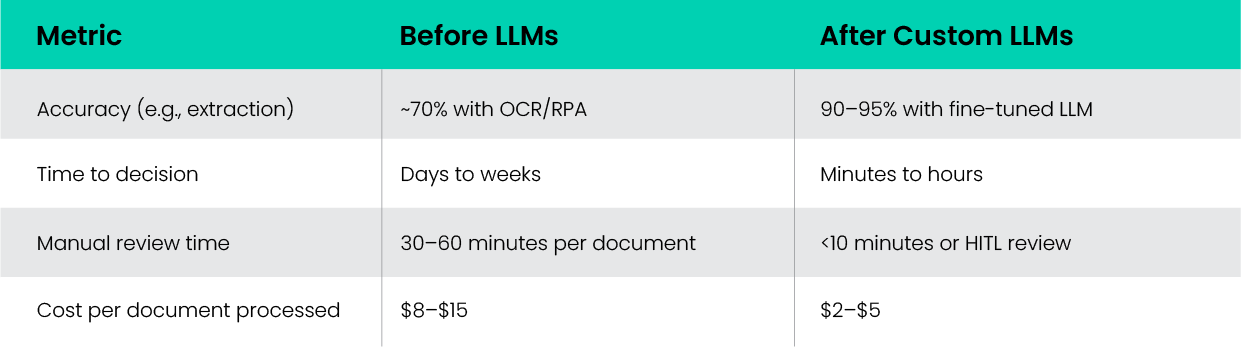

Metrics for Measuring LLM Impact in Document Processing

To justify investment, teams need tangible metrics across operational, compliance, and business dimensions.

Other metrics:

- Reduction in compliance backlog

- Analyst time saved per quarter

- Decrease in customer onboarding time

Track these KPIs to iterate and demonstrate ROI.

Emerging Trends and What’s Next

Custom LLMs are evolving fast. Keep an eye on these trends:

Multi-Modal Document Understanding

New models handle not just text, but images, charts, and scanned forms—unlocking new processing capabilities.

Model Compression for Edge Deployment

Smaller, faster models allow on-device or branch-level deployment, critical for latency-sensitive tasks.

Synthetic Data for Fine-Tuning

Generate labeled examples using AI to overcome data scarcity—especially for rare or sensitive document types.

AI Agents in Document Workflows

Autonomous agents that combine multiple LLM calls, trigger downstream actions, or assign HITL review dynamically.

These advances make the document stack smarter, faster, and more autonomous.

Conclusion

The complexity of financial documents isn’t going away. But our tools can get smarter. Custom LLMs represent a shift from brittle automation to context-aware intelligence when implemented thoughtfully. The key is aligning technical capability with regulatory reality and operational goals.

Finance teams that treat LLMs as long-term partners, not one-off projects, will unlock compounding value: faster decisions, fewer errors, more agile compliance.

Custom LLM initiatives in finance increasingly sit at the intersection of machine learning, backend engineering, infrastructure automation, and frontend application development. Organizations like Amrood Labs are helping financial institutions make this leap not with hype, but with grounded, domain-aware solutions. The future isn’t just paperless. It’s intelligent.